What if 110 AI agents could investigate your entire project portfolio while you sleep?

I build a lot of things. WhatsApp bots, CRM dashboards, video processing pipelines, insurance automation tools — dozens of projects accumulating in my home directory. Some are thriving. Some are abandoned mid-thought. Some probably have security issues nobody noticed.

The problem isn't building. It's knowing what you've built.

So I built Night Research — an autonomous multi-agent system that investigates my 10 most recent projects using a pyramid swarm of ~110 AI agents, generates a full portfolio report, and sends me a WhatsApp summary. All while I sleep.

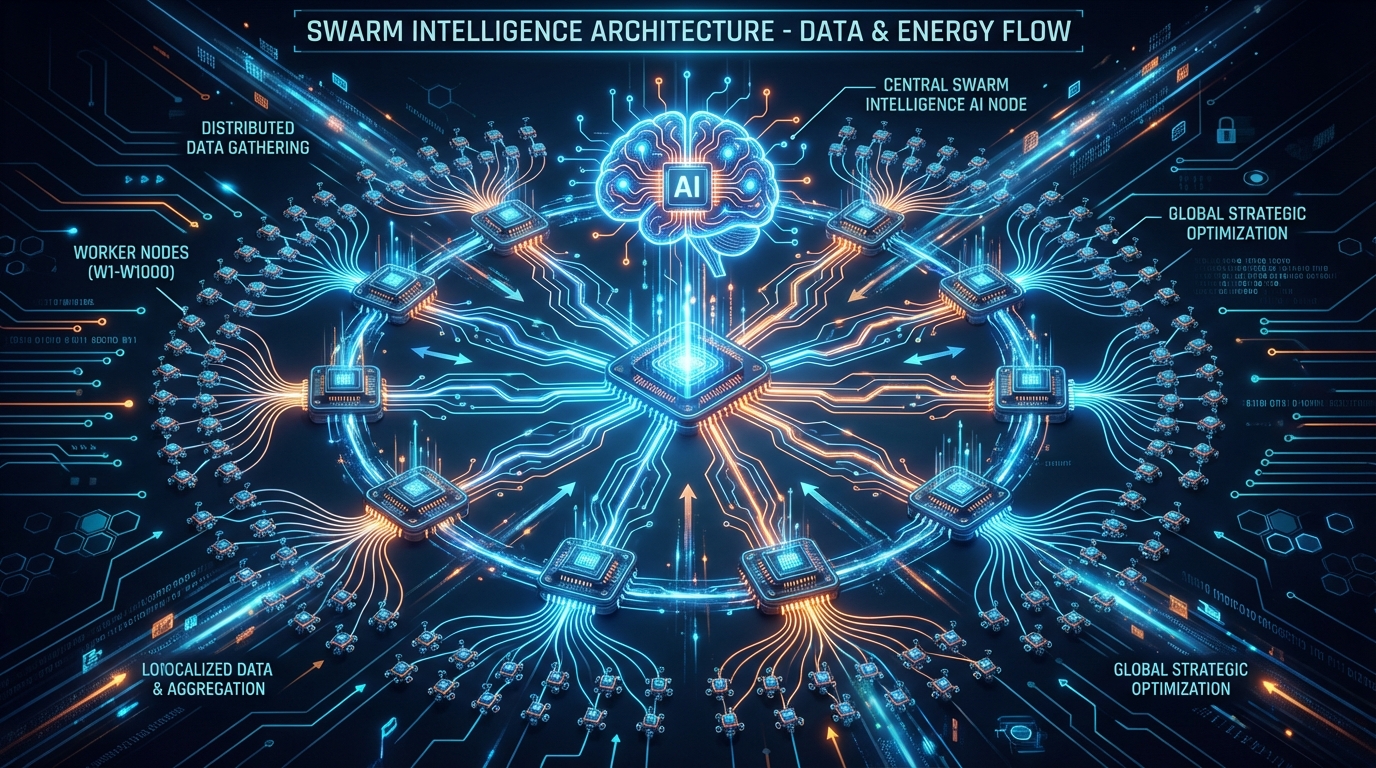

The Architecture: A Pyramid That Flattened Into Something Better

The original vision was a clean 3-tier pyramid:

- Tier 0: Main orchestrator

- Tier 1: 10 project agents (one per project)

- Tier 2: 100 question agents (10 per project)

Each project agent would spawn its own question agents. Beautiful hierarchy. One problem: subagents can't spawn subagents in Claude Code. Only one level of nesting is allowed.

This could have killed the project. Instead, it made it better.

The redesigned architecture runs two fan-out phases from the main orchestrator, followed by synthesis and delivery:

- Phase 1 — Discovery: 10 Explore agents each understand one project and generate 10 tailored questions

- Phase 2 — Investigation: ~100 Explore agents each answer one question with evidence from the actual source code

- Phase 3 — Synthesis: Main agent combines everything into per-project reports + a portfolio analysis

- Phase 4 — Delivery: WhatsApp summary hits my phone

The main orchestrator now has full visibility into all 100+ answers and can do cross-project analysis that nested agents never could.
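The flattened fan-out can be sketched in a few lines of Python. This is only an illustration of the control flow, not how Night Research is implemented (the real system is driven by prompts, not code). `run_agent` is a hypothetical stand-in that returns canned text instead of launching a real subagent, and the project names are made up.

```python
import asyncio


async def run_agent(role: str, prompt: str) -> str:
    """Hypothetical stand-in for launching one subagent; returns canned text."""
    await asyncio.sleep(0)  # yield control, as a real agent call would
    if role == "discovery":
        # A discovery agent produces 10 questions, one per line.
        return "\n".join(f"Question {i} about {prompt}" for i in range(1, 11))
    return f"Answer to: {prompt}"


async def night_research(projects: list[str]) -> dict[str, list[str]]:
    # Phase 1: one discovery agent per project, all launched from the top level.
    discoveries = await asyncio.gather(
        *(run_agent("discovery", project) for project in projects)
    )

    # Flatten into (project, question) pairs so Phase 2 is also a single fan-out.
    pairs = [
        (project, question)
        for project, text in zip(projects, discoveries)
        for question in text.splitlines()
    ]

    # Phase 2: one question agent per question, still only one level deep.
    answers = await asyncio.gather(
        *(run_agent("question", question) for _, question in pairs)
    )

    # The orchestrator ends up holding every answer, grouped by project.
    report: dict[str, list[str]] = {project: [] for project in projects}
    for (project, _), answer in zip(pairs, answers):
        report[project].append(answer)
    return report


if __name__ == "__main__":
    result = asyncio.run(night_research(["crm-dashboard", "whatsapp-bot"]))
    print({name: len(answers) for name, answers in result.items()})
```

Because both fan-outs start from the same function, nothing is ever nested more than one level, and the grouped answers are all in one place when synthesis begins.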

How It Actually Works

Step 1: Find the Projects

A bash command scans my home directory for the 10 most recently modified directories that look like real projects (they have .git/, CLAUDE.md, or package.json). System directories like Desktop, Downloads, and Library are filtered out.
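The actual scan is a bash command, which is not reproduced here. As a rough Python equivalent of the same idea, assuming projects sit directly under the home directory and that the three excluded names are the whole filter (the post only gives them as examples), it might look like this. `recent_projects` and both constants are illustrative names.

```python
from pathlib import Path

# Marker files or directories that make a folder "look like a real project".
PROJECT_MARKERS = (".git", "CLAUDE.md", "package.json")
# Assumed exclusion list; the post names only these three as examples.
EXCLUDED = {"Desktop", "Downloads", "Library"}


def recent_projects(root: Path, limit: int = 10) -> list[Path]:
    """Return the most recently modified project-like directories under root."""
    candidates = [
        d
        for d in root.iterdir()
        if d.is_dir()
        and d.name not in EXCLUDED
        and any((d / marker).exists() for marker in PROJECT_MARKERS)
    ]
    # Newest first, by the directory's own modification time.
    candidates.sort(key=lambda d: d.stat().st_mtime, reverse=True)
    return candidates[:limit]


if __name__ == "__main__":
    for project in recent_projects(Path.home()):
        print(project.name)
```

One caveat with this sketch: a directory's modification time changes only when entries are added or removed directly inside it, so edits deep in a subfolder may not bump a project up the list.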

Step 2: Discovery Agents Go Deep

Each discovery agent reads the project's CLAUDE.md, package.json, README, and directory structure. It determines purpose, tech stack, status, and complexity. Then it generates 10 specific questions tailored to what it found.

Not generic questions like "Is the code well-tested?" — but specific ones like "The project has 3 test files in __tests__/ but none for the API routes in src/routes/ — what's the test coverage gap?"

Step 3: 100 Question Agents Investigate

Each question agent uses Glob, Grep, and Read to investigate one specific question. The agents cite files, rate their confidence, and note side findings. They run on the Haiku model for speed and cost efficiency.

Step 4: Synthesis

The main orchestrator groups all answers by project, writes per-project reports with risk assessments, then synthesizes a portfolio-level final report with cross-project insights and prioritized recommendations.

The Secret Sauce: Zero Traditional Code

Here's what makes this wild: the entire system has zero lines of traditional code. No JavaScript. No Python. No build step.

It's all natural language:

- CLAUDE.md: orchestration rules and execution flow

- prompts/discovery-agent.md: prompt template for Phase 1 agents

- prompts/question-agent.md: prompt template for Phase 2 agents

The "programming language" is English with structured output delimiters (=== SECTION ===). The "runtime" is Claude Code itself.
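To show why the delimiter format is easy to consume, here is a minimal parser sketch. In Night Research the orchestrator reads this output directly, so no such parser exists in the project. The section names `ANSWER` and `CONFIDENCE` are hypothetical, since the post only specifies the `=== SECTION ===` shape.

```python
import re

# A header is a whole line of the form "=== NAME ===".
SECTION_HEADER = re.compile(r"^=== (.+?) ===$")


def parse_sections(text: str) -> dict[str, str]:
    """Split agent output into {section name: body} on '=== NAME ===' lines."""
    sections: dict[str, list[str]] = {}
    current: str | None = None
    for line in text.splitlines():
        match = SECTION_HEADER.match(line.strip())
        if match:
            current = match.group(1).strip()
            sections[current] = []
        elif current is not None:
            sections[current].append(line)
        # Lines before the first header (agent chatter) are ignored.
    return {name: "\n".join(body).strip() for name, body in sections.items()}


if __name__ == "__main__":
    sample = (
        "Here is my report.\n"
        "=== ANSWER ===\n"
        "No tests cover src/routes/.\n"
        "=== CONFIDENCE ===\n"
        "high\n"
    )
    print(parse_sections(sample))
```

Unlike JSON, a stray sentence before the first header or an unescaped quote inside a body does not break parsing, which is the fragility argument in a nutshell.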

Cost: ~$2.50 Per Portfolio Audit

| Component | Count | Model |
|---|---|---|
| Discovery agents | 10 | Sonnet |
| Question agents | ~100 | Haiku |
| Synthesis | 1 | Opus |
| Total | ~111 agents | ~$2.50 total |

Key Learnings

Constraints breed better design. The subagent nesting limitation forced a cleaner architecture with better observability. The main orchestrator sees everything.

Model stratification matters. Using Sonnet for discovery (needs reasoning) and Haiku for questions (just reading files) cuts cost by roughly 60% with minimal quality loss.

Structured output > JSON for agents. The === SECTION === format is a sweet spot — human-readable but machine-parseable. JSON is too fragile when agents generate it.

Read-only by design, not by instruction. Using Explore agents (which lack Write/Edit tools) enforces read-only at the platform level. Prompt instructions alone aren't enough.

What's Next

Night Research is the foundation for something bigger: autonomous portfolio intelligence. Run it nightly, track trends over time, get alerted when a project needs attention.

Because the best time to audit your projects is while you're dreaming about the next one.

Built with Claude Code. The entire project — architecture, prompts, documentation, images, and this blog post — was created in a single conversation session.

Check it out: github.com/aviz85/night-research
